Jump-to-Recipe: An LLM-powered AI assistant for recipes

By Ben Lafreniere, ~7 minute read

In this post, I'll demo an LLM-based user interface prototype I've been working on. The motivation for this project came while I was cooking – I had a recipe webpage loaded on my tablet, and I was trying to follow along but I kept getting distracted by video ads on the page. Even worse, the video ads would regularly reload and reflow the page, causing me to lose my place. Eventually, I got so frustrated that I paused the whole cooking project to copy and paste the recipe into a text file.

That experience got me thinking – LLMs can read recipe webpages as well as humans, and once the information is extracted it could be presented exactly the way I want it.

So I built Jump-to-Recipe – an LLM-powered AI assistant for recipes.

The user experience is pretty simple. You paste a recipe URL into the interface and click 'Extract Recipe'. The system reads the page, pulls out the important info, and displays it in a consistent format:

Now that I had an AI assistant capable of reading recipe webpages, I wanted to see how far I could push the concept.

To enable users to ask questions about the recipe, or other info on the page, I added a chat feature. For example, you can ask the system to “suggest improvements to the recipe, based on what's in the comments section”:

You can also request changes to the extracted recipe. A simple example is to “double the recipe”:

The flexibility of this approach makes it really fun. Some other requests I've tried:

- Annotate the recipe with dairy-free options

- Add an emoji for each recipe ingredient

- Add an estimate of the time to perform each step of the recipe

- Produce a grocery list of ingredients for this recipe

In the current prototype you have to type queries, but it would be pretty easy to add hands-free operation using off-the-shelf APIs for voice input and text-to-speech output. This would make the system even more useful while cooking, where it's common to have your hands dirty.

End user customization

The chat functionality is extremely flexible, but there are some modifications to recipes that I always want to see. As an example, I live in Canada, and products here are labeled in metric units, but it's pretty common to find recipes online that use US customary measures (a 12-oz can of tomato sauce is how many mL again?)

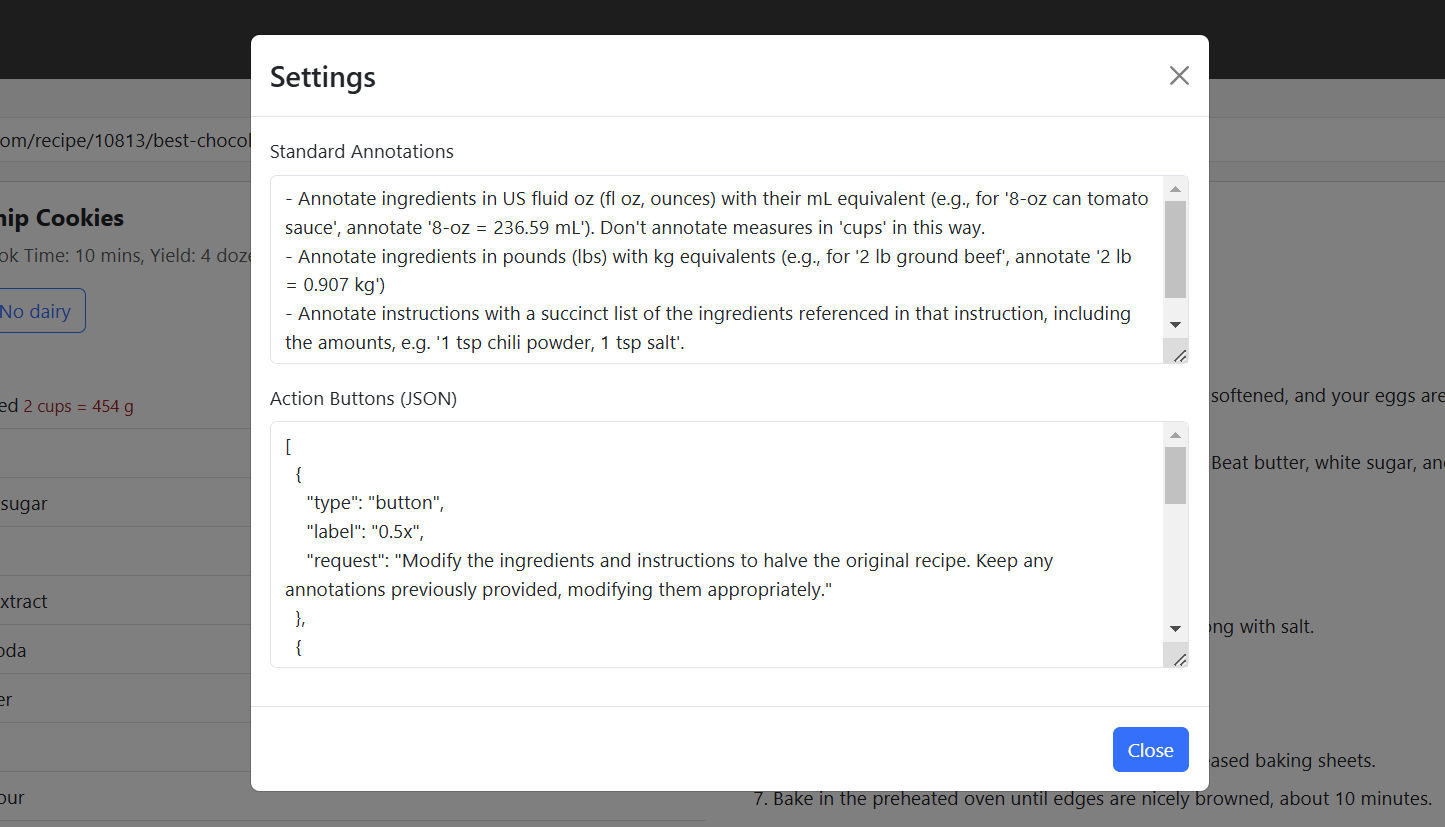

So I added a feature to specify some standard annotations that apply to all extracted recipes:

I've included the unit conversions, and also asked the system to annotate each step of the recipe with the ingredients needed at that step. But these requests are specified in natural language, so it would be super easy for a user to add additional items to meet their individual needs.
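Here's a minimal sketch of how standing requests like these could be folded into the extraction prompt. The names `STANDARD_ANNOTATIONS` and `build_extraction_prompt`, and the prompt wording, are made up for illustration and are not the prototype's actual code:

```python
# Illustrative only: names and prompt wording are assumptions, not the prototype's code.
STANDARD_ANNOTATIONS = [
    "Annotate ingredient quantities given in US customary units with metric equivalents.",
    "Annotate each step with the ingredients needed at that step.",
]


def build_extraction_prompt(base_prompt: str, annotations: list[str]) -> str:
    """Append the user's standing annotation requests to the extraction prompt."""
    if not annotations:
        return base_prompt
    bullet_list = "\n".join(f"- {item}" for item in annotations)
    return f"{base_prompt}\n\nAlways apply these annotations:\n{bullet_list}"


prompt = build_extraction_prompt(
    "Extract the recipe from the page below as structured JSON.",
    STANDARD_ANNOTATIONS,
)
print(prompt)
```

Under this scheme, adding a new standing annotation just means appending another sentence to the list.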

I also added custom action buttons for common changes to a recipe: '0.5x' and '2x' buttons to adjust the yield, and a 'No dairy' button to suggest dairy substitutions. Similar to the standard annotations, the 'code' for these buttons is basically just a natural language instruction. For example, the code for the '2x' button is as follows:

    {
        'type': 'button',
        'label': '2x',
        'request': 'Modify the ingredients and instructions to double the original recipe. Keep any annotations previously provided, modifying them appropriately.'
    }

Wiring up buttons to send natural-language instructions to the LLM might seem like a hack, but it's actually an extremely extensible approach. You can imagine individual users adding their own buttons based on their needs.
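To make that concrete, here's a rough sketch of how a click could be turned into a message for the LLM. The config shape follows the '2x' example above. The 'No dairy' wording and the `message_for_click` helper are hypothetical, written for illustration:

```python
# Illustrative sketch: the config shape follows the '2x' example;
# the 'No dairy' request text and the helper name are assumptions.
ACTION_BUTTONS = [
    {
        "type": "button",
        "label": "2x",
        "request": (
            "Modify the ingredients and instructions to double the original recipe. "
            "Keep any annotations previously provided, modifying them appropriately."
        ),
    },
    {
        "type": "button",
        "label": "No dairy",
        # Hypothetical wording, not taken from the prototype.
        "request": "Suggest dairy-free substitutions for any dairy ingredients.",
    },
]


def message_for_click(label: str, buttons: list[dict]) -> dict:
    """Translate a button click into the user message that would be sent to the LLM."""
    for button in buttons:
        if button["label"] == label:
            return {"role": "user", "content": button["request"]}
    raise KeyError(f"No action button labeled {label!r}")


print(message_for_click("2x", ACTION_BUTTONS))
```

With a setup like this, a user-defined button is just one more dictionary in the list; no new application logic is needed.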

Insights on building AI-powered user interfaces

This was a fun project to get my feet wet with building LLM-powered user interfaces, but what are some key lessons and takeaways?

Lesson 1: Don’t overfit to anticipated use cases. I originally considered using deterministic code for things like doubling the recipe, or providing unit conversions. But I quickly realized that it’s better to keep the approach general. In the end I made it so the AI model can transform the recipe based on any request, and the presentation format has very general 'annotation' fields associated with each ingredient and instruction. This enables the system to handle possibilities that I, as the developer, never anticipated.
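As a sketch of what such a general presentation format might look like, here is one possible shape. The class and field names are assumptions for illustration; the prototype's actual schema may differ:

```python
from dataclasses import dataclass, field


# Hypothetical schema: every ingredient and step carries free-form annotations.
@dataclass
class Ingredient:
    text: str
    annotations: list[str] = field(default_factory=list)


@dataclass
class Step:
    text: str
    annotations: list[str] = field(default_factory=list)


@dataclass
class Recipe:
    title: str
    ingredients: list[Ingredient]
    steps: list[Step]


def render(recipe: Recipe) -> str:
    """Render the recipe as plain text, showing annotations in brackets."""
    lines = [recipe.title, "", "Ingredients:"]
    for item in recipe.ingredients:
        notes = f" [{'; '.join(item.annotations)}]" if item.annotations else ""
        lines.append(f"- {item.text}{notes}")
    lines += ["", "Steps:"]
    for number, step in enumerate(recipe.steps, start=1):
        notes = f" [{'; '.join(step.annotations)}]" if step.annotations else ""
        lines.append(f"{number}. {step.text}{notes}")
    return "\n".join(lines)


recipe = Recipe(
    title="Chili",
    ingredients=[Ingredient("1 (12-oz) can tomato sauce", ["about 355 mL"])],
    steps=[Step("Simmer the sauce for 20 minutes.", ["needs: tomato sauce"])],
)
text = render(recipe)
print(text)
```

Because the annotations are plain strings, the same fields can hold unit conversions, dairy-free swaps, emoji, or time estimates without any change to the rendering code.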

Lesson 2: Make the conversation with the AI model the core of the interaction loop. All interactions (including clicks on the custom action buttons) are essentially just adding to a “conversation” with the LLM, which functions as the 'model' (in the model-view-controller sense) for the interface. The advantage of this approach is that it is really extensible. It also unifies UI interactions (like clicking an action button) and natural language queries. For example, if I accidentally clicked the action button to double the recipe, I could use a message like "undo that last change" to correct it. I believe that unifying natural language voice queries and UI interactions is really important as we think about how to enhance user interfaces with advanced AI. The downside of this approach is latency, since interactions that add to the conversation must function at LLM speeds. How to extend this approach to also provide fast UI updates is an interesting open question.
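A stripped-down sketch of this interaction loop might look like the following. The `Conversation` class and the `fake_llm` stand-in are hypothetical; a real version would call the model's API where the stub is:

```python
from typing import Callable

Message = dict[str, str]


class Conversation:
    """Single source of truth for the UI: every interaction appends to the history."""

    def __init__(self, llm: Callable[[list[Message]], str]) -> None:
        self.llm = llm
        self.messages: list[Message] = []

    def send(self, content: str) -> str:
        """Add a user turn (typed or button-generated) and record the model's reply."""
        self.messages.append({"role": "user", "content": content})
        reply = self.llm(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_llm(messages: list[Message]) -> str:
    # Stand-in for a real model call, so the sketch runs offline.
    return f"(reply to message {len(messages)})"


convo = Conversation(fake_llm)
# A button click and a typed message go through exactly the same path.
convo.send("Modify the ingredients and instructions to double the original recipe.")
convo.send("undo that last change")
print(len(convo.messages))
```

Since the doubling request is already in the history when the undo message arrives, the model has the context it needs to interpret "that last change".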

HCI research thoughts

This project is a bit of a provocation, because a lot of content on the web is not created with the experience of users as the top priority. Recipe web pages contain long intro sections for SEO purposes, and are filled with ads to monetize the content. I get that that's how you make money with web content in 2025, but if we're on the cusp of personal AI assistants that can read content as well as humans, and display it back to them just as they like, that model begins to break down. It's not hard to imagine a next-generation web browser powered by AI that stands between you and the web, and has your needs in mind. This isn't a new idea – there is a long history of work in the HCI and ASSETS research communities on adapting content for accessibility purposes, for example. But LLMs make this so easy that it feels like we are at a turning point. Investigating the implications of these new capabilities (and building prototypes that push these boundaries) are compelling areas for research.

Also interesting is the general model of a fixed and highly personalized UI on top of extremely variable data adapted from diverse formats and sources. How might we empower and support end users in specifying these personalized UIs? And how do we mitigate the risks that can arise in this model? Because the user is not seeing the underlying content first-hand, there are risks that users could act on hallucinated or incorrect information. Finding ways to present users with information in the format they want while ensuring accuracy and preserving data provenance is another interesting problem.

Technical details

The architecture of the system is pretty straightforward. The front-end UI is built with React and JavaScript. The backend is Python using FastAPI, OpenAI, and some LangChain modules for the web scraping.

When a new recipe URL is entered, the system grabs the page HTML, and uses a custom prompt to the LLM to do the extraction. A conversation history with the LLM is started, including the page and extracted recipe, which grows with subsequent requests (including chat messages and clicks on the action buttons).
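The flow above can be sketched roughly as follows. To keep the example self-contained, it swaps in the standard library's `html.parser` in place of the LangChain scraping modules, and it assumes the page is reduced to visible text before being sent. The helper names and the message layout are also assumptions, not the prototype's code:

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    SKIPPED = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def page_text(html: str) -> str:
    """Reduce raw HTML to newline-separated visible text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


def start_conversation(html: str, extraction_prompt: str, extracted_recipe: str) -> list[dict]:
    """Seed the history with the prompt, the page content, and the extracted recipe."""
    return [
        {"role": "system", "content": extraction_prompt},
        {"role": "user", "content": page_text(html)},
        {"role": "assistant", "content": extracted_recipe},
    ]


sample_html = (
    "<html><head><style>p{color:red}</style><script>var ad = 1;</script></head>"
    "<body><h1>Chili</h1><p>1 can tomato sauce</p></body></html>"
)
history = start_conversation(sample_html, "Extract the recipe as JSON.", '{"title": "Chili"}')
print(history[1]["content"])
```

Later chat messages and button clicks would then be appended to `history` as further user and assistant turns.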

For any given request, the LLM is prompted to reply with JSON in one of two formats – either a "structured recipe" JSON format that the front-end can render, or a "conversational response" JSON format which gets displayed in the conversation thread.
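A minimal sketch of how the backend might tell the two reply formats apart is below. The field names (`type`, `recipe`, `text`) and the action labels are hypothetical, since the exact JSON schemas aren't spelled out here:

```python
import json


def handle_reply(raw_reply: str) -> tuple[str, dict]:
    """Parse the LLM's JSON reply and decide what the front end should do with it.

    Field names and action labels are illustrative assumptions.
    """
    reply = json.loads(raw_reply)
    if not isinstance(reply, dict):
        raise ValueError("Expected a JSON object from the LLM")
    kind = reply.get("type")
    if kind == "recipe":
        # Structured recipe: replace what the recipe view is showing.
        return ("render_recipe", reply["recipe"])
    if kind == "message":
        # Conversational response: append to the chat thread.
        return ("append_to_thread", {"text": reply["text"]})
    raise ValueError(f"Unexpected reply type: {kind!r}")


action, payload = handle_reply('{"type": "message", "text": "Try adding more cumin."}')
print(action, payload)
```

Rejecting anything that doesn't match one of the two shapes gives the backend a natural place to retry or report an error when the model's output is malformed.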

The system uses OpenAI's gpt-4o-mini as the LLM. I had originally planned to switch to gpt-4o for deployment, but the mini model is actually good enough for this application, and its responsiveness enhances the user interaction.

Wrap Up + Links

Thanks for reading! If anyone is interested in trying the prototype out, the code is available on my GitHub at lafrenben/recipe-assistant.

Thanks to Mark Parent, Jordan Wan, and Tovi Grossman for conversations and feedback while I was playing around on this project.